Found 19 talks with keyword dark energy

Abstract

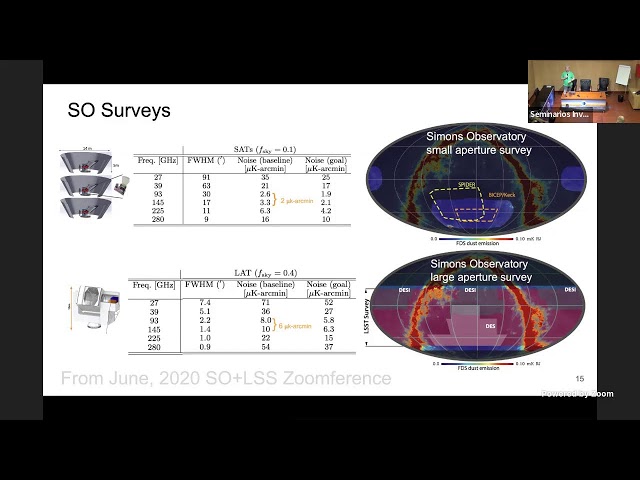

Simons Observatory (SO) is a new Cosmic Microwave Background telescope currently under construction in the Atacama Desert, close to ALMA and other recent CMB telescopes. It will have six small aperture (42cm) telescopes (SATs), and one large aperture (6m) telescope (LAT), observing at 30-280GHz (1-10mm) using transition edge sensors (TES) and kinetic inductance detectors (KIDs). As well as observing the polarisation of the CMB to unprecedented sensitivity, the LAT will perform a constant survey at higher angular resolution, enabling the systematic detection of transient sources in the submm, with large overlap with optical surveys such as LSST, DESI and DES. As well as giving an overview of SO, I summarise the types of transient sources that are expected to be seen by SO, including flaring stars, quasars, asteroids, and man-made satellites.
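As a quick check of the quoted band, the frequency range can be converted to wavelength with λ = c/ν. The sketch below is illustrative only and is not taken from the talk; the helper name `wavelength_mm` is hypothetical.

```python
# Speed of light in vacuum (exact SI value), in metres per second.
C_M_PER_S = 299_792_458.0


def wavelength_mm(freq_ghz: float) -> float:
    """Return the vacuum wavelength in millimetres for a frequency in GHz.

    Hypothetical helper for illustration; applies lambda = c / nu.
    """
    return C_M_PER_S / (freq_ghz * 1e9) * 1e3


# The two band edges quoted in the abstract.
for freq_ghz in (30.0, 280.0):
    print(f"{freq_ghz:5.0f} GHz -> {wavelength_mm(freq_ghz):.2f} mm")
```

The band edges come out at roughly 10 mm and 1.07 mm, consistent with the rounded 1-10mm range quoted above.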

Abstract

I present the recent results obtained using the updated version of MG-MAMPOSSt, a code that constrains modified gravity (MG) models viable at cosmological scales using determination of galaxy cluster mass profiles with kinematics and lensing analyses. I will discuss limitations and future developments of this method in view of upcoming imaging and spectroscopic surveys, as well as the possibilities of including X-ray data to break degeneracy among model parameters. Finally I will show preliminary results about the constraints that can be obtained on the inner slope of dark matter profiles when adding the velocity dispersion of the Brightest Central Galaxy (BCG) in the dataset of MG-MAMPOSSt.

Abstract

Cosmological observations (redshifts, cosmic microwave background radiation, abundance of light elements, formation and evolution of galaxies, large-scale structure) find explanations within the standard Lambda-CDM model, although many times after a number of ad hoc corrections. Nevertheless, the expression ‘crisis in cosmology’ stubbornly reverberates in the scientific literature: the higher the precision with which the standard cosmological model tries to fit the data, the greater the number of tensions that arise. Moreover, there are alternative explanations for most of the observations. Therefore, cosmological hypotheses should be very cautiously proposed and even more cautiously received.

There are also sociological and philosophical arguments to support this scepticism. Only the standard model is considered by most professional cosmologists, while challenges to the most fundamental ideas of modern cosmology are usually neglected. Funding, research positions, prestige, telescope time, publication in top journals, citations, conferences, and other resources are dedicated almost exclusively to standard cosmology. Moreover, religious, philosophical, economic, and political ideologies in a world dominated by anglophone culture also influence the contents of cosmological ideas.

Abstract

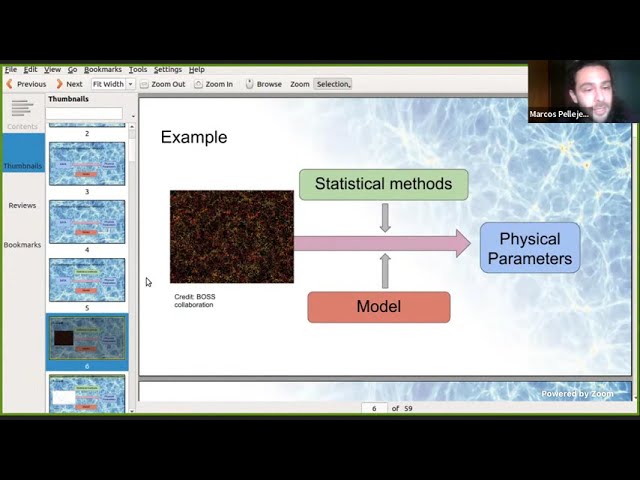

A key problem that we are facing in cosmology nowadays is that we cannot make accurate predictions with our current theoretical models. We have all of the pieces of the standard model but it doesn't have an analytical solution. The only way to have accurate predictions is to run a cosmological simulation. Then, why not use these simulations as the theory model? Well, for one main reason, if we want to explore the full parameter space comprised in the standard model, we need thousands of such simulations, and they are terribly computationally expensive. We wouldn't be able to do it in years! In this talk, I will tell you how in the last few years we have come up with a way to circumvent this problem.

Abstract

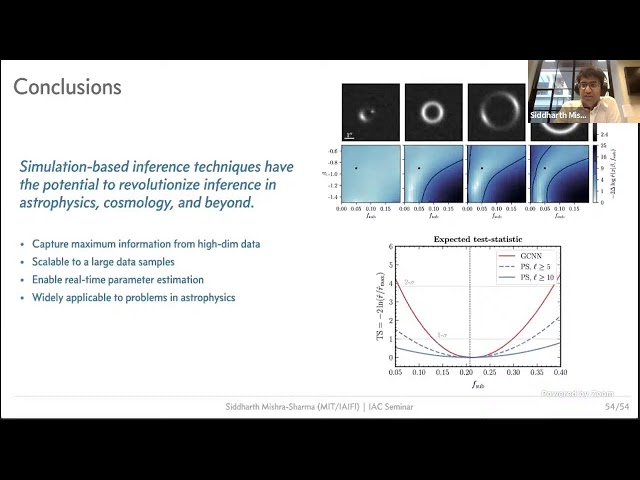

The next decade will see a deluge of new cosmological data that will enable us to accurately map out the distribution of matter in the local Universe, image billions of stars and galaxies to unprecedented precision, and create high-resolution maps of the Milky Way. Signatures of new physics as well as astrophysical processes of interest may be hiding in these observations, offering significant discovery potential. At the same time, the complexity of astrophysical data provides significant challenges to carrying out these searches using conventional methods. I will describe how overcoming these issues will require a qualitative shift in how we approach modeling and inference in cosmology, bringing together several recent advances in machine learning and simulation-based (or likelihood-free) inference. I will ground the talk through examples of proposed analyses that use machine learning-enabled simulation-based inference with an aim to uncover the identity of dark matter, while at the same time emphasizing the generality of these techniques to a broad range of problems in astrophysics, cosmology, and beyond.

https://rediris.zoom.us/j/83193959785?pwd=TExXSDJ6UDg5a24yWDM1TnlOWkNTZz09

Meeting ID: 831 9395 9785

Passcode: 343950O

YouTube: https://youtu.be/1Nkzn-cGaIo

Abstract

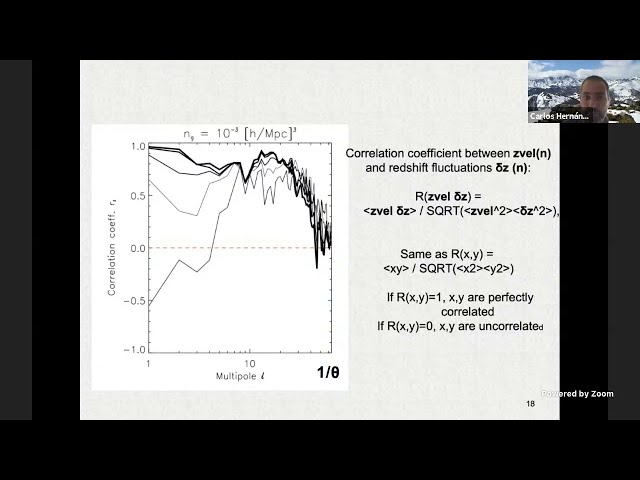

In cosmology, it is customary to convert observed redshifts into distances in order to study the large scale distribution of matter probes like galaxies and quasars, and to obtain cosmological constraints thereof. In this talk, I describe a new approach which bypasses such conversion and studies the "field of redshifts" as a new cosmological observable, hereafter dubbed angular redshift fluctuations (ARF). By comparing linear theory predictions to the output of N-body cosmological simulations, I will show how the ARF are actually sensitive to both the underlying density and radial peculiar velocity fields in the universe, and how one can obtain cosmological and astrophysical constraints from them. And since "the proof of the pudding is in the eating", I will demonstrate how ARF provide, under a very simple setup, competitive constraints on the nature of peculiar velocities and gravity from BOSS DR13 data. Furthermore, I will also show that by combining ARF with maps of the cosmic microwave background (CMB), we can unveil the signature of the missing (and moving) baryons, doubling the amount of detected baryons in disparate cosmic epochs ranging from z=0 up to z=5, and providing today's most precise description of the spatial distribution of baryons in the universe.

Abstract

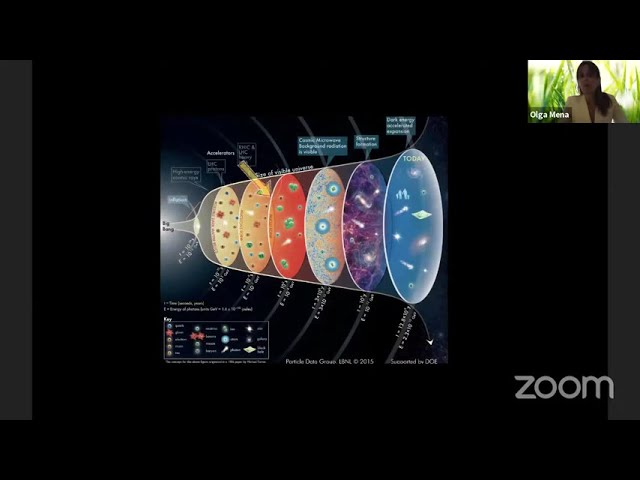

In this talk, we shall review the impact of the neutrino properties on the different cosmological observables. We shall also present the latest cosmological constraints on the neutrino masses and on the effective number of relativistic species. Special attention will be devoted to the role of neutrinos in solving the present cosmological tensions.

Abstract

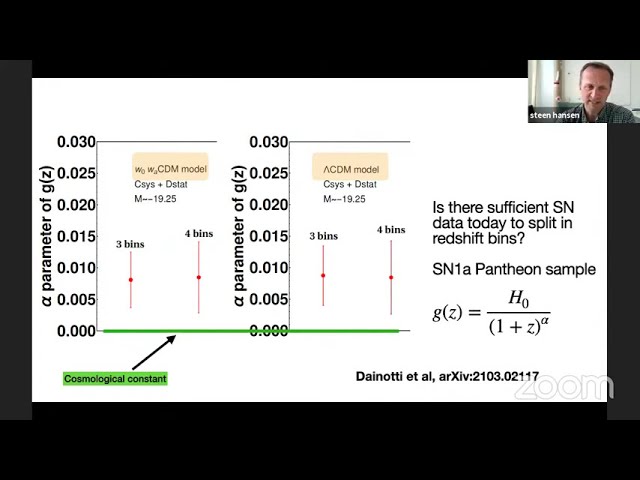

The expansion of the Universe is in an accelerated phase. This acceleration was first established by observations of supernovae, and has since been confirmed through a range of independent observations. The physical cause of this acceleration is termed Dark Energy, and most observations indicate that Einstein's cosmological constant provides a very good fit. In that case, approximately 70% of the energy of the Universe presently consists of this cosmological constant.

In this talk I will address the possibility that there may exist other possible causes of the observed acceleration. In particular, I will discuss a concrete model, inspired by the well-known Lorentz force in electromagnetism, where Dark Matter causes the acceleration. With a fairly simple numerical simulation we find that the model appears consistent with all observations.

In such a model, where Dark Matter properties cause the acceleration of the Universe, there is no need for a cosmological constant.

Abstract

The concordance model of cosmology with its constituents dark matter and dark energy is an established description of some anomalous observations. However, a series of additional contradictions indicate that the current view is far from satisfactory. Rather than describing observations with new numbers, it is argued that science should reflect on its method, considering the fact that real progress was usually achieved by simplification. History, not only with the example of the epicycles, has shown many times that creating new ad-hoc concepts dominated over putting in doubt what had been established earlier. Even critical astrophysicists often believe that lab-tested particle physics has reliable evidence for its model. It is argued instead that the very same sociological and psychological mechanisms have been at work and brought particle physics into a still more desperate situation long ago. As an example, a couple of absurdities of the recent Higgs boson announcements are outlined. It seems inevitable that physics needs a new culture of data evaluation: raw data and source code must become equally transparent and openly accessible.
